MySQL高效分页与分页优化分析

发布时间:01/15 来源: 浏览:

关键词:

在mysql中分页优化是一个老生常谈的问题,如果我们几万条数据直接使用limit不需要做任何操作即可完成分页并且性能还不错,但是百万千万级数据我们就需要对limit进行有效的优化才能完成高效分页了,要不就会卡死数据库哦。

在Percona Performance Conference 2009大会上来自yahoo的Surat Singh Bhati (surat@yahoo-inc.com) 和 Rick James (rjames@yahoo-inc.com)给大家分享了MySQL高效分页的经验。

一、概述

-

常见分页方式

-

schema设计和常见的分页方式(偏移)

-

避免分页偏移过大的技巧

-

性能对比

-

重点

二、常见分页方式

三.前提

大记录表要高效分页

-

WHERE条件使用索引完成

-

WHERE条件和排序能够使用同个索引完成

-

基础知识

-

http://dev.mysql.com/doc/refman/5.1/en/mysql-indexes.html

-

http://dev.mysql.com/doc/refman/5.1/en/order-by-optimization.html

-

http://dev.mysql.com/doc/refman/5.1/en/limit-optimization.html

索引 a_b_c (a, b, c)

下面的查询可以使用索引来解决ORDER部分:

-

ORDER BY a

-

ORDER BY a,b

-

ORDER BY a, b, c

-

ORDER BY a DESC, b DESC, c DESC

下面的查询可以使用索引来解决WHERE和ORDER部分::

-

WHERE a = const ORDER BY b, c

-

WHERE a = const AND b = const ORDER BY c

-

WHERE a = const ORDER BY b, c

-

WHERE a = const AND b > const ORDER BY b, c

下面的查询无法使用索引完成,需额外排序:

-

ORDER BY a ASC, b DESC, c DESC /* 混合ASC和DESC */

-

WHERE g = const ORDER BY b, c /* 字段g不是索引一部分 */

-

WHERE a = const ORDER BY c /* 没有使用字段b */

-

WHERE a = const ORDER BY a, d /* 字段d不是索引的一部分 */

四、Schema 设计

| 代码如下 | |

CREATE TABLE `message` ( `id` int(11) NOT NULL AUTO_INCREMENT, `title` varchar(255) COLLATE utf8_unicode_ci NOT NULL, `user_id` int(11) NOT NULL, `content` text COLLATE utf8_unicode_ci NOT NULL, `create_time` int(11) NOT NULL, `thumbs_up` int(11) NOT NULL DEFAULT '0', /* 投票数 */ PRIMARY KEY (`id`), KEY `thumbs_up_key` (`thumbs_up`,`id`) ) ENGINE=InnoDB mysql> show table status like 'message' G Engine: InnoDB Version: 10 Row_format: Compact Rows: 50000040 /* 5千万 */ Avg_row_length: 565 Data_length: 28273803264 /* 26 GB */ Index_length: 789577728 /* 753 MB */ Data_free: 6291456 Create_time: 2009-04-20 13:30:45 |

|

-

按照time(发布时间)分页,新发布的在前面

-

按照thumps_up(投票数)分页,票高的在前面

五、典型的分页查询

1.统计记录数量

1.统计记录数量

| 代码如下 | |

SELECT count(*) FROM message |

|

| 代码如下 | |

SELECT * FROM message ORDER BY id DESC LIMIT 0, 20

ORDER BY id DESC LIMIT 0, 20

ORDER BY id DESC LIMIT 20, 20

ORDER BY id DESC LIMIT 40, 20

|

|

六、explain

| 代码如下 | |

mysql> explain SELECT * FROM message ORDER BY id DESC LIMIT 10000, 20G ***************** 1. row ************** id: 1 select_type: SIMPLE table: message type: index possible_keys: NULL key: PRIMARY key_len: 4 ref: NULL rows: 10020 Extra: 1 row in set (0.00 sec) |

|

-

它可以使用索引,并且只要找到需要的结果后就停止扫描.

-

LIMIT 10000, 20 需要读取前10000行,然后获取后面的20行

六、瓶颈

-

较大的偏移(OFFSET)会增加结果集, MySQL has to bring data in memory that is never returned to caller.

-

Performance issue is more visible when your have database that can’t fit in main memory.

-

小比例的低效分页足够产生磁盘I/O瓶颈

-

为了显示“第 21条 至 40条 (共 1000000),需要统计1000000行

七、简单的解决方法

-

不显示记录总数,没用户在乎这个数字

-

不让用户访问页数比较大的记录,重定向他们

八、避免count(*)

-

不显示总数,让用户通过“下一页”来翻页

-

缓存总数,显示一个大概值,没有用户在乎是324533条还是324633 (译:测试在乎-_-!!)

-

Display 41 to 80 of Thousands

-

单独统计总数,在插入和删除时递增/递减

九、解决偏移查询

-

更改ui,不提供跳到某页的按钮

-

LIMIT N 是高效的, 但不要使用 LIMIT M,N

-

从WHERE条件里找到分页(LIMIT N)的线索

-

Find the desired records using more restricted WHERE using given clue and ORDER BY and LIMIT N without OFFSET)

-

十、寻找线索

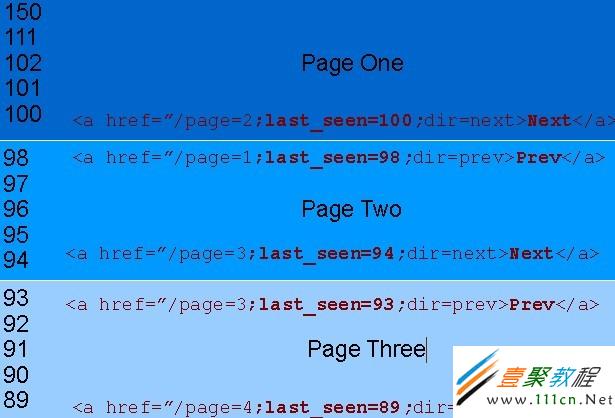

译:last_seen是id。这里的分页只有“上一页”、“下一页” 按钮

十一、根据线索解决方案

下一页:

十一、根据线索解决方案

下一页:

http://domain.com/forum?page=2&last_seen=100&dir=next

WHERE id< 100 /* last_seen */ ORDER BY id DESC LIMIT $page_size /* 没有偏移 */

上一页:

-

http://domain.com/forum?page=1&last_seen=98&dir=prev

WHERE id > 98 /* last_seen */ ORDER BY id ASC LIMIT $page_size /* 没有偏移 */

译:通过每页第一条或最后一条记录的id来做条件筛选,再配合降序和升序获得上/下一页的结果集

十二、根据线索解决方案

十二、根据线索解决方案

| 代码如下 | |

mysql> explain SELECT * FROM message WHERE id < '49999961' ORDER BY id DESC LIMIT 20 G *************************** 1. row *************************** id: 1 select_type: SIMPLE table: message type: range possible_keys: PRIMARY key: PRIMARY key_len: 4 ref: NULL Rows: 25000020 /* 忽略这里 */ Extra: Using where 1 row in set (0.00 sec) |

|

| 代码如下 | |

|

99

99 98 第一页 98 98 98

98 97 第二页 97 10 |

|

我们不能这样查询:

| 代码如下 | |

WHERE thumbs_up< 98 ORDER BY thumbs_up DESC /* 结果将返回重复的记录 */ |

|

| 代码如下 | |

WHERE thumbs_up <= 98 AND <额外的条件> ORDER BY thumbs_up DESC |

|

-

考虑到 thumbs_up 是“主要字段”,如果我们添加一个“次要字段”,我们可以使用“主要字段”和“次要字段”作为查询条件

-

其次,我们可以考虑使用id(primary key)作为我们的次要字段

十五、解决方案

第一页:

第一页:

| 代码如下 | |

SELECT thumbs_up, id FROM message ORDER BY thumbs_up DESC, id DESC LIMIT $page_size +-----------+----+ | thumbs_up | id | +-----------+----+ | 99 | 14 | | 99 | 2 | | 98 | 18 | | 98 | 15 | | 98 | 13 | +-----------+----+ |

|

| 代码如下 | |

SELECT thumbs_up, id FROM message WHERE thumbs_up <= 98 AND (id < 13 OR thumbs_up< 98) ORDER BY thumbs_up DESC, id DESC LIMIT $page_size +-----------+----+ | thumbs_up | id | +-----------+----+ | 98 | 10 | | 98 | 6 | | 97 | 17 | |

|

查询:

| 代码如下 | |

SELECT * FROM message WHERE thumbs_up <= 98 AND (id < 13 OR thumbs_up < 98) ORDER BY thumbs_up DESC, id DESC LIMIT 20 |

|

| 代码如下 | |

SELECT m2.* FROM message m1, message m2 WHERE m1.id = m2.id AND m1.thumbs_up <= 98 AND (m1.id <13 OR m1.thumbs_up< 98) ORDER BY m1.thumbs_up DESC, m1.id DESC LIMIT 20; |

|

| 代码如下 | |

*************************** 1. row *************************** id: 1 select_type: SIMPLE table: m1 type: range possible_keys: PRIMARY,thumbs_up_key key: thumbs_up_key /* (thumbs_up,id) */ key_len: 4 ref: NULL Rows: 25000020 /* 忽略这里 */ Extra: Using where; Using index /* Cover 译:Cover就是说所需要的数据之从索引里获取就可以满足了 */ *************************** 2. row *************************** id: 1 select_type: SIMPLE table: m2 type: eq_ref possible_keys: PRIMARY key: PRIMARY key_len: 4 ref: forum.m1.id rows: 1 Extra: |

|

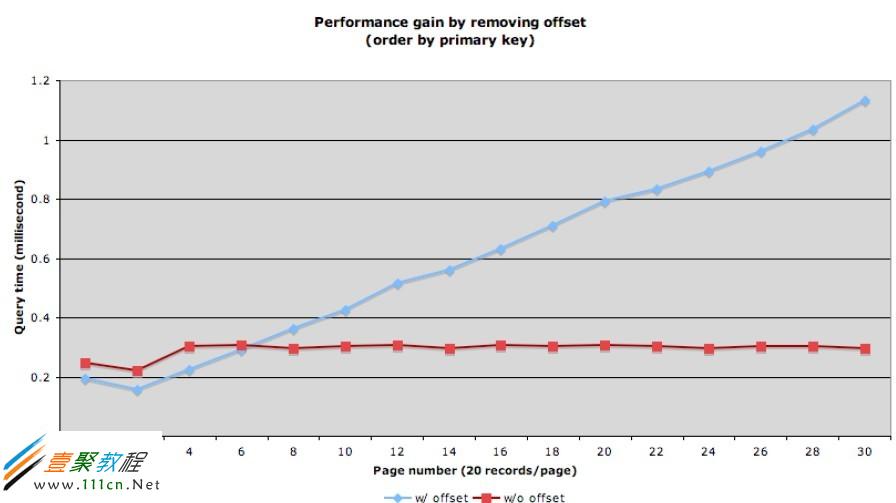

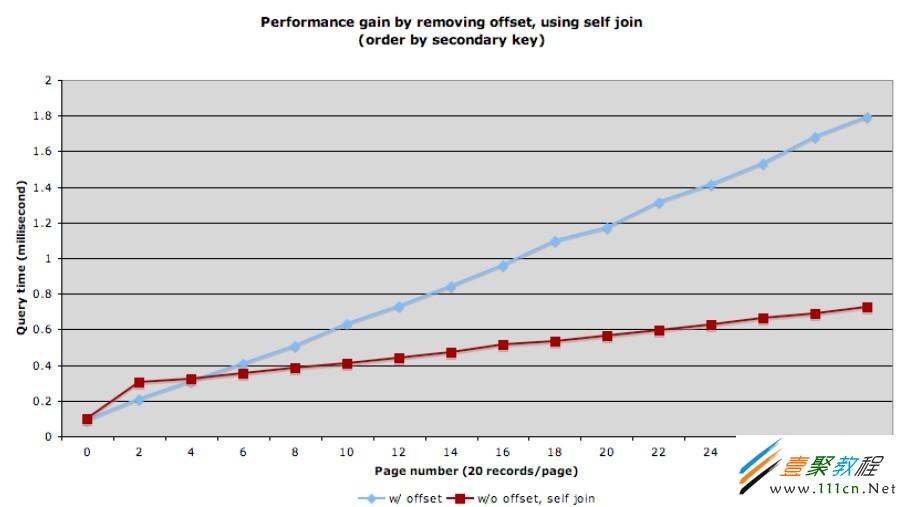

十九、吞吐量提升

每页30条记录,查看第一页的话,使用 LIMIT OFFSET, N方式,可以达到 600 次查询/秒,如果使用 LIMIT N (无偏移)方式,提升到 3.7k 次查询/秒

二十、Bonus Point

Product issue with LIMIT M, N

每页30条记录,查看第一页的话,使用 LIMIT OFFSET, N方式,可以达到 600 次查询/秒,如果使用 LIMIT N (无偏移)方式,提升到 3.7k 次查询/秒

二十、Bonus Point

Product issue with LIMIT M, N

User is reading a page, in the mean time some records may be added to

previous page.

previous page.

Due to insert/delete pages records are going to move forward/backward

as rolling window:

– User is reading messages on 4th page

– While he was reading, one new message posted (it would be there on page

one), all pages are going to move one message to next page.

– User Clicks on Page 5

– One message from page got pushed forward on page 5, user has to read it

again

as rolling window:

– User is reading messages on 4th page

– While he was reading, one new message posted (it would be there on page

one), all pages are going to move one message to next page.

– User Clicks on Page 5

– One message from page got pushed forward on page 5, user has to read it

again

No such issue with news approach

二十一、不足

SEO专家会说:Let bot reach all you pages with fewer number of deep dive

二十一、不足

SEO专家会说:Let bot reach all you pages with fewer number of deep dive

两个解决方案:

Two Solutions:

• Read extra rows

– Read extra rows in advance and construct links for few previous & next pages

• Use small offset

– Do not read extra rows in advance, just add links for few past & next pages

with required offset & last_seen_id on current page

– Do query using new approach with small offset to display desired page

Two Solutions:

• Read extra rows

– Read extra rows in advance and construct links for few previous & next pages

• Use small offset

– Do not read extra rows in advance, just add links for few past & next pages

with required offset & last_seen_id on current page

– Do query using new approach with small offset to display desired page